In previous blog posts, we covered how Experimentation teams use metrics such as conversion rate and revenue as the sole indicators of their experimentation programs' success, and we made the case for why these are vanity metrics. (You can read part 1 here and part 2 here.)

In this final part, we will cover another vanity metric that may give the impression the program is succeeding but doesn't, in fact, give us the full picture.

A term that comes up often in Experimentation practitioner circles is “Culture of Experimentation”. When people are pushed to define it, it's often summarised as:

a) Making everyone excited and enthusiastic about experimentation and testing

b) Sharing everything with stakeholders and team members

c) Showing them the value of Experimentation

d) Getting them to run their own experiments

Whilst these are all noble goals that will certainly help grow experimentation, they don't give you many practical or actionable steps for achieving them.

Experimentation teams are focussed on growing the maturity of experimentation in their organizations and on giving other teams the capability to run their own experiments. After winning those teams over through roadshows, workshops, presentations and training sessions, they equip them with tools and systems in the hope that the experimentation practice will grow.

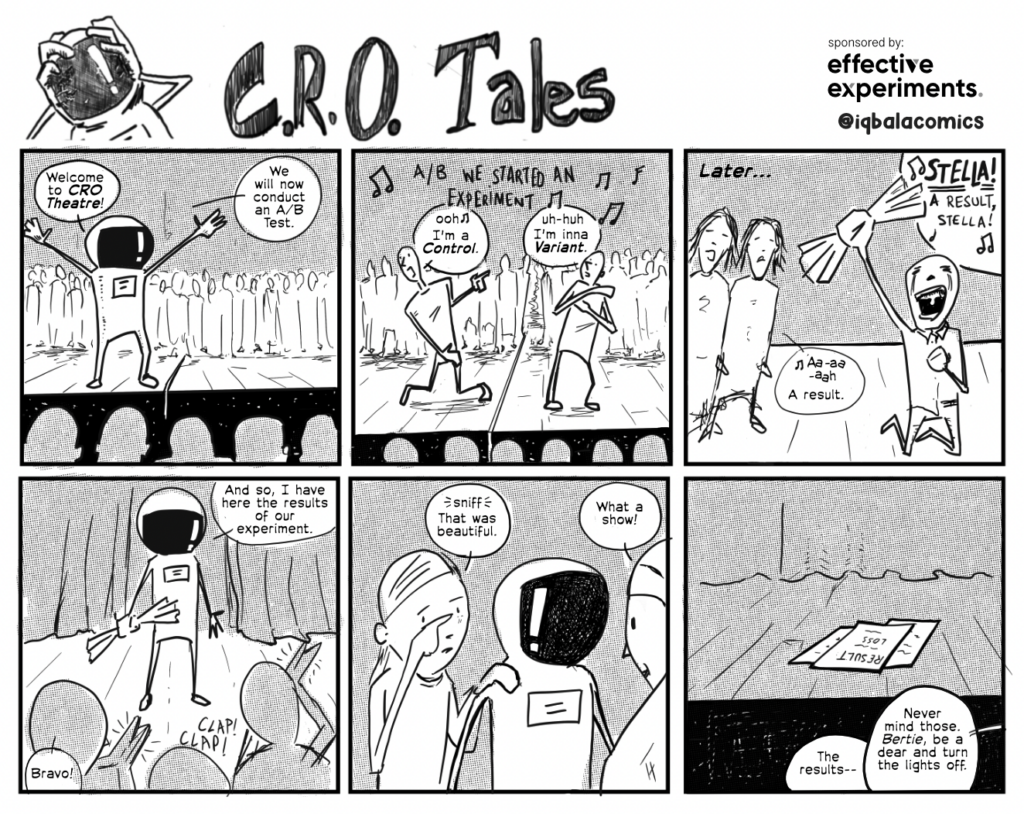

To measure this growth, you would count the number of individuals, teams or units actively involved in experimentation. This Program Growth metric tells you whether the organization's adoption of Experimentation is moving in the right direction.
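As a minimal illustration of how this count can be taken, the Python sketch below compares two readings of the metric: counting every team that has been onboarded, and counting only onboarded teams that launched at least a minimum number of experiments in the reporting period. The `Team` record, its fields, the team names and the `min_experiments` threshold are all hypothetical assumptions for illustration, not a prescribed schema or definition.

```python
from dataclasses import dataclass


@dataclass
class Team:
    # Hypothetical record: these fields are illustrative assumptions, not a real schema.
    name: str
    onboarded: bool            # has the team been through outreach, training and tool access?
    experiments_launched: int  # experiments launched in the reporting period


def onboarded_count(teams: list[Team]) -> int:
    """Naive reading of Program Growth: every team that has been onboarded."""
    return sum(1 for t in teams if t.onboarded)


def active_count(teams: list[Team], min_experiments: int = 1) -> int:
    """Onboarded teams that launched at least `min_experiments` in the period."""
    return sum(
        1 for t in teams
        if t.onboarded and t.experiments_launched >= min_experiments
    )


# Hypothetical example data.
teams = [
    Team("Experimentation CoE", True, 12),
    Team("Checkout", True, 0),
    Team("Search", True, 1),
    Team("Marketing", False, 0),
]

print(onboarded_count(teams))                  # 3
print(active_count(teams))                     # 2
print(active_count(teams, min_experiments=3))  # 1
```

What counts as "actively involved" is a judgement call for each program; the sketch only shows that the two counts can diverge, so the definition you pick matters.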

On its own, Program Growth is a strong metric… when measured correctly.

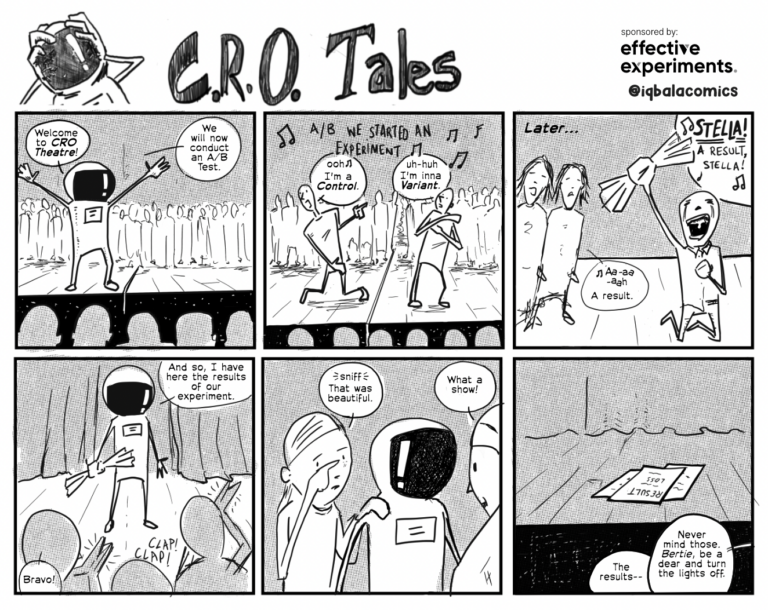

A recurring pattern we have seen in the industry is a haphazard, chaotic approach to growing the experimentation program. Here is a typical scenario we have observed when an experimentation team (a centre of excellence) or individual specialists try to onboard other teams.

There is an initial outreach to the teams to convince them why they should run their own tests. This is followed by a training session on the tools and access to them. After that, the cohort of trained individuals is left to its own devices for the foreseeable future.

The experimentation team has ticked its box. A new team has been onboarded and Experimentation has grown in the organization. What was previously one team has now grown to two. And the journey continues.

The reality, however, is that onboarding a team, especially one that is brand new to experimentation, requires considerable strategy and nuance in how you convince and train them, and it can take a long time. When this isn't in place, the new cohorts do not adopt the practices in a meaningful way. There is no coaching or support to help them iron out their mistakes and improve over time. The result is poorly planned and designed experiments or, worse still, barely any increase in the number of experiments run.

We have previously published content on why Experimentation is not set up for success in organizations owing to the way it is embedded.

Experimentation practitioners, who are already burdened with the technical and practical sides of research, ideation, experimentation and analysis, are suddenly thrust into the role of Change Makers. Their bandwidth is stretched, and as a result they struggle to track progress, monitor for red flags and coach the new teams consistently.

When it is measured without factoring in the maturity and capabilities of the new teams, Program Growth can become a vanity metric.